- Spark configuration properties passed by R are just requests - In most cases, the cluster has the final say regarding the resources apportioned to a given Spark session.
- The cluster overrides ‘silently’ - Many times, no errors are returned when more resources than allowed are requested, or when an attempt is made to change a setting fixed by the cluster.

Using Spark and R inside a Hadoop-based data lake is becoming a common practice at companies. Currently, there is no good way to manage user connections to the Spark service centrally. There are some caps and settings that can be applied, but in most cases there are configurations that the R user will need to customize.

The Running on YARN page on Spark’s official website is the best place to start for configuration settings reference; please bookmark it. Cluster administrators and users can both benefit from this document. If Spark is new to the company, the YARN tuning article, courtesy of Cloudera, does a great job of explaining how the Spark/YARN architecture works.

Recommended properties

The following are the recommended Spark properties to set when connecting to YARN via R:

- spark.executor.memory - The maximum possible is managed by the YARN cluster.
- spark.executor.cores - Number of cores assigned per Executor.
- spark.executor.instances - Number of Executors to start. This property is acknowledged by the cluster only if spark.dynamicAllocation.enabled is set to "false".
- spark.dynamicAllocation.enabled - Overrides the mechanism that Spark provides to dynamically adjust resources. Disabling it provides more control over the number of Executors that can be started, which in turn impacts the amount of storage available for the session. For more information, please see the Dynamic Resource Allocation page on the official Spark website.

Using yarn-client as the value for the master argument in spark_connect() makes the server on which R is running the Spark session’s driver. Here is a sample connection:

```r
library(sparklyr)

conf <- spark_config()

conf$spark.executor.memory <- "300M"
conf$spark.executor.cores <- 2
conf$spark.executor.instances <- 3
conf$spark.dynamicAllocation.enabled <- "false"

sc <- spark_connect(
  master = "yarn-client",
  spark_home = "/usr/lib/spark/",
  version = "1.6.0",
  config = conf
)
```

Executors page

To see how the requested configuration affected the Spark connection, open the Executors page in the Spark web UI.

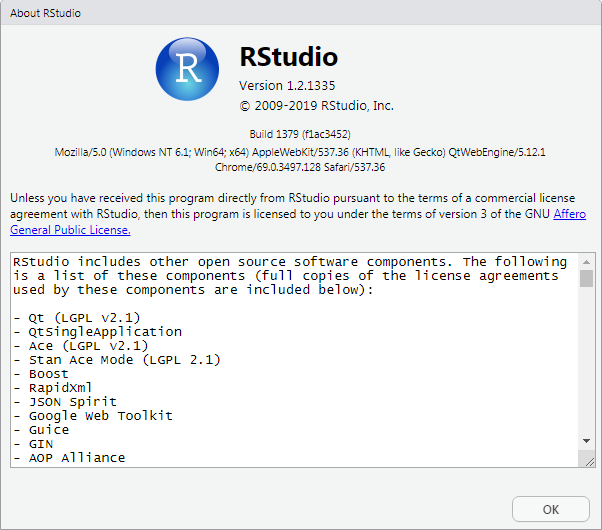
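Because the cluster can override requests silently, it may help to compare the requested settings with the ones the session reports. The sketch below defines a hypothetical helper, `report_overrides()`, which is not part of sparklyr. It assumes the applied settings arrive as a named list or named vector, such as the one sparklyr's `spark_context_config(sc)` is expected to return.

```r
# Hypothetical helper (not part of sparklyr): compare requested Spark
# properties with the values a session reports as applied.
format_value <- function(x) {
  if (is.null(x)) NA_character_ else paste(as.character(x), collapse = ",")
}

report_overrides <- function(requested, applied, keys = names(requested)) {
  applied <- as.list(applied)  # accept a named list or a named vector
  rows <- lapply(keys, function(key) {
    req <- format_value(requested[[key]])
    got <- format_value(applied[[key]])
    data.frame(
      property   = key,
      requested  = req,
      applied    = got,
      # Flag a property that is missing from `applied` or has a different value
      overridden = is.na(got) || !identical(req, got),
      stringsAsFactors = FALSE
    )
  })
  do.call(rbind, rows)
}

# Plain lists stand in for `conf` and the session's applied settings here
requested <- list(spark.executor.memory = "300M", spark.executor.cores = 2)
applied   <- list(spark.executor.memory = "300M", spark.executor.cores = "1")
report_overrides(requested, applied)
```

In a live session, the call would look something like `report_overrides(conf, spark_context_config(sc), keys = c("spark.executor.memory", "spark.executor.cores", "spark.executor.instances"))`. Restricting `keys` matters because a `spark_config()` object may also carry sparklyr-specific entries that never appear in the Spark context. This only catches differences visible in the Spark configuration itself; limits enforced by YARN are still best confirmed on the Executors page.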


Typically, this will be the server where sparklyr is located.ĭriver (Executor): The Driver Node will also show up in the Executor list. One Node can have multiple Executors.ĭriver Node: The Node that initiates the Spark session. Master Node: The server that coordinates the Worker nodes.Įxecutor: A sort of virtual machine inside a node.

Worker Node: A server that is part of the cluster and are available to run Spark jobs It may be useful to provide some simple definitions for the Spark nomenclature: For more information, please see this Memory Management Overview page in the official Spark website.Ĭonf <- spark_config() # Load variable with spark_config() conf $ <- "16G" # Use `$` to add or set values sc <- spark_connect( master = "yarn-client", config = conf) # Pass the conf variable Spark definitions The default is set to 60% of the requested memory per executor. memory - The limit is the amount of RAM available in the computer minus what would be needed for OS operations. Not a necessary property to set, unless there’s a reason to use less cores than available for a given Spark session. It defaults to using all of the available cores. The following are the recommended Spark properties to set when connecting via R: You can do this using the spark_install() function, for example: Recommended properties

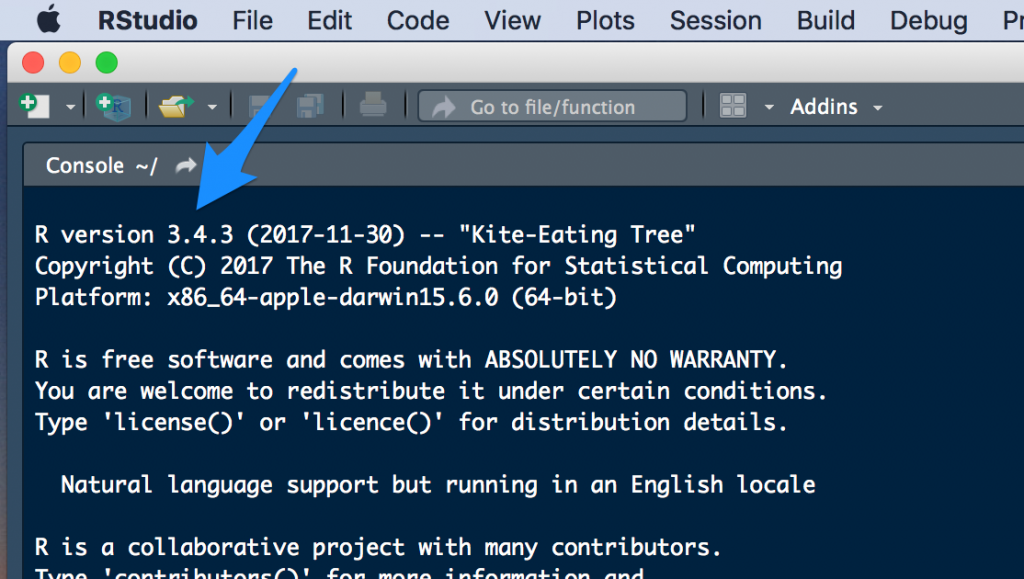

#Check rstudio version install#

To work in local mode, you should first install a version of Spark for local use. Local mode also provides a convenient development environment for analyses, reports, and applications that you plan to eventually deploy to a multi-node Spark cluster. Local mode is an excellent way to learn and experiment with Spark.
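The recommended local-mode properties (sparklyr.cores.local, sparklyr.shell.driver-memory, spark.memory.fraction) can be set together before connecting. The sketch below is a hypothetical helper, `local_conf()`, that writes them into a configuration list, by default the one returned by `spark_config()`. The memory value is a placeholder to size to the machine, and `sparklyr.cores.local` and `spark.memory.fraction` are only written when given, so their defaults apply otherwise.

```r
# Hypothetical helper (not part of sparklyr): add the recommended local-mode
# properties to a configuration list. `base` defaults to sparklyr's
# spark_config() and, thanks to lazy evaluation, is only built when no
# other base list is supplied.
local_conf <- function(cores = NULL,
                       driver_memory = "4G",
                       memory_fraction = NULL,
                       base = sparklyr::spark_config()) {
  conf <- base
  # Left unset, sparklyr uses all available cores
  if (!is.null(cores)) {
    conf$`sparklyr.cores.local` <- cores
  }
  # In local mode the driver JVM runs the whole session, so leave room for the OS
  conf$`sparklyr.shell.driver-memory` <- driver_memory
  # Left unset, Spark keeps its default (0.6 in Spark 2.0 and later)
  if (!is.null(memory_fraction)) {
    conf$spark.memory.fraction <- memory_fraction
  }
  conf
}

# Usage in an R session with sparklyr and a local Spark installed
# (for example via spark_install()):
# library(sparklyr)
# conf <- local_conf(cores = 4, driver_memory = "8G", memory_fraction = 0.6)
# sc <- spark_connect(master = "local", config = conf)
```

Keeping the optional properties unset by default mirrors the advice above: only override the core count or memory fraction when there is a concrete reason to.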
